November 13, 2025

By Bob O'Donnell

Ask virtually anyone about what’s currently going on in the tech industry and they’ll be able to answer you: the AI is coming, the AI is coming. But as obvious as that high-level trend may be, what hasn’t been as clear is that a hybrid AI model, involving the major public cloud providers, on-premises data centers/private clouds and edge computing devices, is driving the biggest change. To better understand this critical development, TECHnalysis Research commissioned a survey of over 1,000 IT decision-makers from medium and large organizations across ten US industries. The results are compelling. The study findings reveal that the era of “cloud-first” thinking for AI workloads and applications is rapidly giving way to a more nuanced, hybrid approach, one that balances the strengths of public cloud, private infrastructure, and edge computing.

For years, the public cloud was heralded as the ultimate destination for enterprise workloads, promising scalability, flexibility, and cost savings. Yet as artificial intelligence has become central to business strategy, organizations are re-evaluating where their most critical workloads should reside. The survey data shows that a massive wave of companies is actively moving AI workloads back from the public cloud to private infrastructure, driven by concerns over cost, security, and privacy. This isn’t a retreat from innovation; it’s a sign of market maturity. Enterprises are now seeking a “cloud-smart” strategy, leveraging the public cloud for training, private cloud for sensitive data, and edge computing for real-time inference.

Let’s look at the numbers. Eighty percent of organizations in the study have either already repatriated AI workloads, are actively planning to, or are considering it. Only a fifth say they have no plans to move anything back on-prem. That’s not a fringe reaction—it’s the market maturing. And it’s backed with budget: more than three-quarters expect on-premises AI infrastructure investment to rise over the next one to three years. In other words, capital is shifting toward local compute.

To be clear, this doesn’t mean companies are abandoning the cloud for AI workloads. In fact, for today’s most popular GenAI and ML use cases (including content generation, predictive analytics and code generation), public cloud remains the dominant venue. The nuance is that it’s no longer the only venue and, for certain categories, it’s no longer the best. Robotics and automation, for example, already lean edge-first, a reminder that physical-world use cases have very different demands from many other areas.

The net result is that more than 80% of IT decision-makers believe a hybrid architecture is important for their organization, and nearly three-quarters are actively pursuing or developing plans for hybrid AI deployments. The top drivers for this shift are clear. Cost reduction is paramount, as running all AI workloads in the public cloud—especially generative AI—has proven expensive. Data security and compliance are essential, particularly for regulated industries, and keeping sensitive information on-premises or on-device is now a critical requirement for many new AI applications.

The top reasons for repatriating AI workloads mirror the drivers for hybrid AI: cost control, privacy, and security. Many organizations also want to leverage the investments they’ve already made in on-premises infrastructure.

But hybrid AI isn’t just about the cloud and the data center—it’s also about the edge. Nearly 60% of organizations have already extended AI to edge devices or are in the planning stages. The benefits of edge AI are distinct: real-time performance, personalization, and improved data privacy by processing locally. The challenges, however, are significant. Device resource constraints, managing and updating models across thousands of distributed devices, developing edge-optimized AI models, and ensuring data security and privacy at the edge are all top concerns.

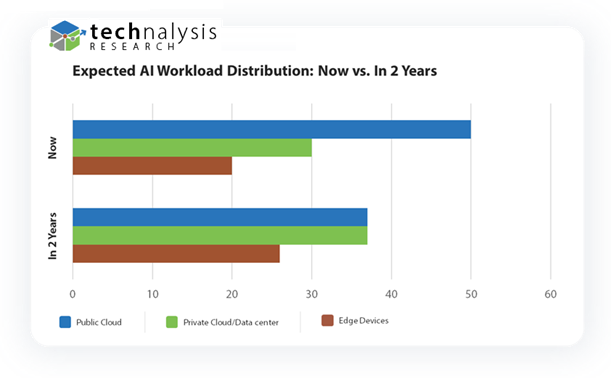

One of the most surprising results of the study stems from how people see trends toward Ai workload distribution evolving. look at expectations two years out. As Figure 1 illustrates, respondents forecast a roughly balanced, three-way split of AI workloads across public cloud, private cloud (or on-prem data centers), and edge devices.

Fig. 1

This is a profound change. It implies that infrastructure teams, data guardians, and app developers will need to think horizontally—about building and governing AI systems designed from the start to land in multiple places with consistent security, observability, and lifecycle management. It also means enterprises will need to standardize on ways to move models and data between environments without friction.

Another surprising finding from the study is the perceived importance of neural processing units (NPUs) in AI PCs, which can serve as critical edge devices. Despite a slower-than-expected start for AI PC software, the awareness and intent signals are unmistakable. Eighty-five percent of decision-makers say that on-device NPUs are important to their AI applications today, and that figure climbs past ninety percent when they look two years out.

The market has moved past “cloud-first” to “cloud-smart,” balancing workloads based on specific needs. The key drivers—cost, security, and privacy—are universal, and the future is a balanced three-way split across public cloud, private data centers, and edge devices. Hybrid AI is no longer a niche strategy—it’s the new default for enterprise computing.

The Executive Summary for the TECHnalysis Research study “The Future of AI is Hybrid” is available for free here.

Here’s a link to the original column: https://www.linkedin.com/pulse/world-enterprise-ai-turning-hybrid-bob-o-donnell-k1mjc

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on LinkedIn at Bob O’Donnell or on Twitter @bobodtech.
